Machine Learning Jet Physics at CERN

One series of projects close to my heart used machine learning to discriminate between different physics processes at the Large Hadron Collider (LHC). Our work on hierarchical clustering helped bring sophisticated ML techniques to bear on deciphering fundamental physical processes. The idea is that each scattering process leaves behind a signature, or trail, of the underlying particles involved. From the data (now public), we determined that the energy deposited in the LHC's calorimeters is the most discriminating feature, and that the deposition pattern is the signature of these particles.
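In jet physics, "hierarchical clustering" usually means sequential recombination of calorimeter deposits or particles into jets. As an illustration only (not necessarily the algorithm or code used in this work), here is a minimal pure-Python sketch of the standard generalized-kt family. Particles are hypothetical `(pt, eta, phi)` tuples, and the recombination is a simplified pT-weighted average rather than full four-momentum addition.

```python
import math

def delta_r2(a, b):
    """Squared angular distance in (eta, phi), with the phi difference wrapped to [-pi, pi)."""
    dphi = (a[2] - b[2] + math.pi) % (2 * math.pi) - math.pi
    deta = a[1] - b[1]
    return deta * deta + dphi * dphi

def merge(a, b):
    """Simplified pT-weighted recombination of two (pt, eta, phi) objects."""
    pt = a[0] + b[0]
    eta = (a[0] * a[1] + b[0] * b[1]) / pt
    dphi = (b[2] - a[2] + math.pi) % (2 * math.pi) - math.pi  # average phi relative to a
    phi = a[2] + b[0] * dphi / pt
    phi = (phi + math.pi) % (2 * math.pi) - math.pi
    return (pt, eta, phi)

def cluster(particles, R=0.4, p=-1):
    """Generalized-kt clustering: p=1 is kt, p=0 Cambridge/Aachen, p=-1 anti-kt."""
    objs = list(particles)
    jets = []
    while objs:
        best = None  # (distance, i, j); j is None for the beam distance
        for i, a in enumerate(objs):
            d_ib = a[0] ** (2 * p)  # beam distance d_iB = pt^(2p)
            if best is None or d_ib < best[0]:
                best = (d_ib, i, None)
            for j in range(i + 1, len(objs)):
                b = objs[j]
                d_ij = min(a[0] ** (2 * p), b[0] ** (2 * p)) * delta_r2(a, b) / R ** 2
                if d_ij < best[0]:
                    best = (d_ij, i, j)
        _, i, j = best
        if j is None:
            jets.append(objs.pop(i))  # closest to the beam: promote to a final jet
        else:
            b = objs.pop(j)  # pop j first because j > i
            a = objs.pop(i)
            objs.append(merge(a, b))
    return jets
```

With anti-kt (`p=-1`), soft particles cluster around hard ones first, which is why it tends to produce regular, cone-like jets.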

Short-term Stock Q-Trader

Almost every day I wish I could turn back time to yesterday and buy the stock that just rose by an inexplicable 200% today. With that hope, I built an implementation of Q-learning for (short-term) stock trading. The model uses n-day windows of closing prices to decide whether the best action at a given time is to buy, sell, or sit. Because of this short-term state representation, the model is not very good at decisions that depend on long-term trends, but it is quite good at predicting peaks and troughs. Its performance on Alibaba (BABA) and Apple (AAPL) is shown below.

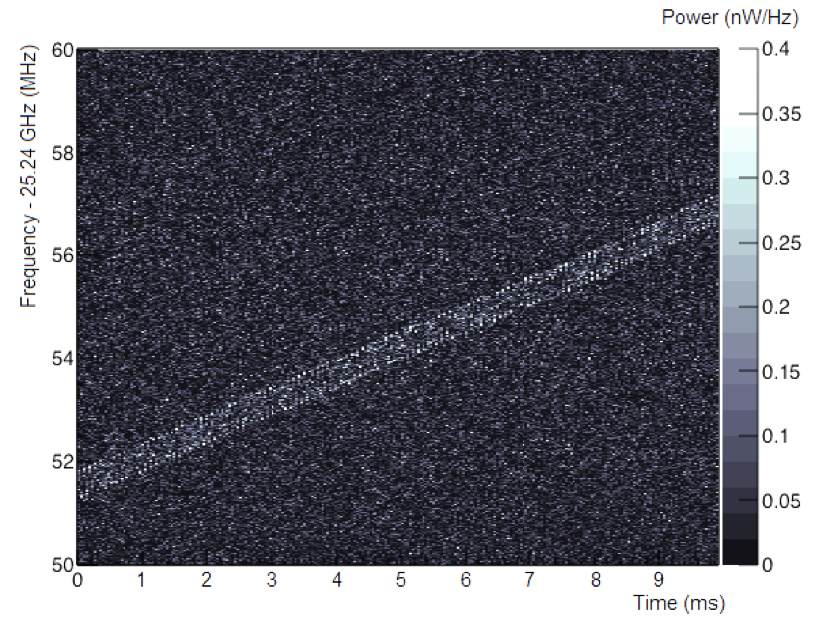
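To make the state/action idea above concrete, here is a minimal tabular Q-learning sketch. It is not the model behind the plots (which may well use a neural Q-function); the discretized state (up/down pattern of an n-day window plus whether a share is held), the single-share position, the profit-on-sell reward, and all hyperparameters are hypothetical choices for illustration.

```python
import random
from collections import defaultdict

ACTIONS = ("sit", "buy", "sell")

def window_state(prices, t, n):
    """Hypothetical state: up/down pattern of the n-1 daily changes in the n-day window ending at t."""
    window = [prices[max(i, 0)] for i in range(t - n + 1, t + 1)]  # pad early days with prices[0]
    return tuple(int(b > a) for a, b in zip(window, window[1:]))

def train(prices, n=4, episodes=50, alpha=0.1, gamma=0.95, epsilon=0.1, seed=0):
    """Tabular Q-learning over (price pattern, holding?) states with sit/buy/sell actions."""
    rng = random.Random(seed)
    Q = defaultdict(lambda: [0.0, 0.0, 0.0])
    for _ in range(episodes):
        held = None  # purchase price of the single share we may hold
        for t in range(len(prices) - 1):
            s = (window_state(prices, t, n), held is not None)
            if rng.random() < epsilon:  # epsilon-greedy exploration
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda k: Q[s][k])
            reward = 0.0
            if ACTIONS[a] == "buy" and held is None:
                held = prices[t]
            elif ACTIONS[a] == "sell" and held is not None:
                reward = prices[t] - held  # realized profit
                held = None
            s_next = (window_state(prices, t + 1, n), held is not None)
            # Q-learning update: bootstrap on the best action in the next state
            Q[s][a] += alpha * (reward + gamma * max(Q[s_next]) - Q[s][a])
    return Q

def act(Q, prices, t, n, holding):
    """Greedy action for day t under the learned Q-table."""
    s = (window_state(prices, t, n), holding)
    return ACTIONS[max(range(len(ACTIONS)), key=lambda k: Q[s][k])]
```

Because the state only sees the last n days, a table like this can learn short-term patterns around local peaks and troughs but has no notion of longer trends, which mirrors the limitation described above.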

Using ML for Spectroscopy Signal Classification

This work on identifying a multitude of complex electron signal structures was done in collaboration with the University of Mainz, Germany. Using machine learning models, we developed a scheme based on the traits of these structures to analyze and classify cyclotron radiation emission spectroscopy signals. Properly understanding and exploiting these traits will be instrumental in improving cyclotron frequency reconstruction and, in the future, in boosting our ability to achieve world-leading sensitivity in the tritium endpoint measurement.
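For context on why frequency reconstruction matters: the measured cyclotron frequency maps directly to the electron's kinetic energy through the relativistic factor, f = eB / (2π γ m_e) with γ = 1 + K / (m_e c²). This is standard physics rather than anything specific to this analysis; the small sketch below uses CODATA constants, and the 1 T field in the usage note is an assumed illustrative value.

```python
import math

E_CHARGE = 1.602176634e-19   # elementary charge, C (exact)
M_E = 9.1093837015e-31       # electron mass, kg (CODATA 2018)
M_E_C2_EV = 510998.95        # electron rest energy, eV (CODATA 2018)

def cyclotron_frequency(kinetic_ev, b_tesla):
    """Relativistic cyclotron frequency f = e B / (2 pi gamma m_e), in Hz."""
    gamma = 1.0 + kinetic_ev / M_E_C2_EV
    return E_CHARGE * b_tesla / (2 * math.pi * gamma * M_E)

def kinetic_energy(freq_hz, b_tesla):
    """Invert the relation: a measured frequency gives gamma, hence kinetic energy in eV."""
    gamma = E_CHARGE * b_tesla / (2 * math.pi * M_E * freq_hz)
    return (gamma - 1.0) * M_E_C2_EV
```

At an assumed 1 T field, an electron near the tritium endpoint (about 18.6 keV) radiates at roughly 27 GHz, and each eV of kinetic energy shifts the frequency by roughly 50 kHz, so small errors in the reconstructed frequency translate directly into energy resolution.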

Q-learning for Cliff Walking

Cliff walking is a standard undiscounted, episodic task with start and goal states and the usual actions UP, DOWN, LEFT, and RIGHT. The reward is -1 on all transitions except those into the red "cliff" region: stepping into this region incurs a reward of -100 and sends the agent instantly back to the start. The graph below shows the performance of Sarsa and Q-learning with epsilon-greedy action selection (epsilon = 0.1). The Jupyter notebook with interactive code is here.

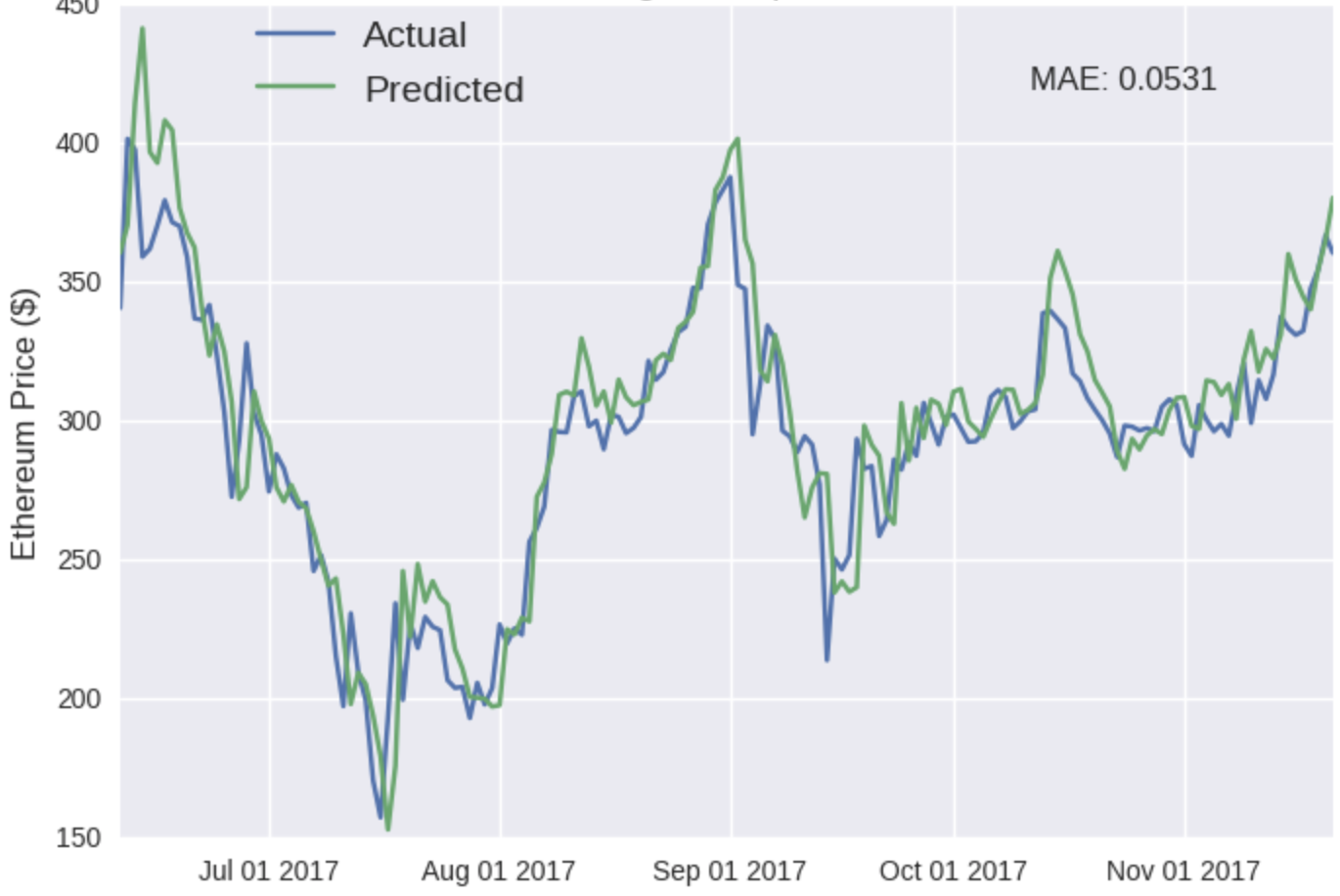
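The comparison above can be reproduced in outline with the sketch below, which follows the textbook 4x12 cliff-walking grid. It is not the notebook's code; the step size `alpha=0.5`, the 500 episodes, and the seed are assumed values. The only difference between the two methods is the bootstrap target: Sarsa uses the action it will actually take next, while Q-learning uses the greedy one.

```python
import random

ROWS, COLS = 4, 12
START, GOAL = (3, 0), (3, 11)
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # UP, DOWN, LEFT, RIGHT

def step(s, a):
    """Move within the grid; entering the cliff costs -100 and resets to the start."""
    r = min(max(s[0] + MOVES[a][0], 0), ROWS - 1)
    c = min(max(s[1] + MOVES[a][1], 0), COLS - 1)
    if r == ROWS - 1 and 0 < c < COLS - 1:  # bottom row between start and goal
        return START, -100
    return (r, c), -1

def eps_greedy(Q, s, eps, rng):
    if rng.random() < eps:
        return rng.randrange(4)
    best = max(Q[s])
    return rng.choice([a for a in range(4) if Q[s][a] == best])  # random tie-breaking

def run(method, episodes=500, alpha=0.5, eps=0.1, seed=0):
    """Train 'sarsa' or 'q_learning'; return the Q-table and per-episode returns."""
    rng = random.Random(seed)
    Q = {(r, c): [0.0] * 4 for r in range(ROWS) for c in range(COLS)}
    returns = []
    for _ in range(episodes):
        s, total = START, 0
        a = eps_greedy(Q, s, eps, rng)
        while s != GOAL:
            s2, reward = step(s, a)
            total += reward
            if method == "sarsa":
                a2 = eps_greedy(Q, s2, eps, rng)
                target = Q[s2][a2]  # on-policy: value of the action actually taken next
            else:
                target = max(Q[s2])  # off-policy: value of the greedy action
            Q[s][a] += alpha * (reward + target - Q[s][a])  # undiscounted (gamma = 1)
            s = s2
            a = a2 if method == "sarsa" else eps_greedy(Q, s, eps, rng)
        returns.append(total)
    return Q, returns

def greedy_path(Q, max_steps=100):
    """Follow the greedy policy from the start, stopping at the goal or after max_steps."""
    s, path = START, [START]
    while s != GOAL and len(path) <= max_steps:
        s, _ = step(s, max(range(4), key=lambda a: Q[s][a]))
        path.append(s)
    return path
```

Plotting the per-episode returns of `run("sarsa")` and `run("q_learning")` gives the familiar picture: Q-learning learns the optimal path along the cliff edge but occasionally falls off while exploring, whereas Sarsa settles on a safer, longer route.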

Predicting Cryptocurrency Prices

Once blockchain started gaining traction, I quickly became interested in how it works independently of the banking system, and perhaps in making some money from what most economists called a bubble, perhaps rightly so. From coinmarketcap.com, we download the features of the top 10 cryptocurrencies by market cap. We then employ a Long Short-Term Memory (LSTM) model, which uses previous data (from both BTC and ETH) to predict the next day’s closing price of a specific coin. The Jupyter notebook can be found here.
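Before an LSTM can use "previous data" to predict the next day, the aligned daily series have to be cut into sliding windows. The sketch below shows one way to do that preparation step; it is not the notebook's code, and the window length and the per-window normalization (each value divided by the first day of its window, minus 1) are hypothetical choices.

```python
def make_windows(btc, eth, target, window=5):
    """Turn aligned daily closing prices into (X, y) pairs for a sequence model.

    X[k] holds `window` consecutive days of [btc, eth] closes, each normalized by the
    first day of its window; y[k] is the target coin's next-day close, normalized the
    same way. X has shape (samples, window, 2), the layout an LSTM layer expects.
    """
    if not (len(btc) == len(eth) == len(target)):
        raise ValueError("series must be aligned day by day")
    X, y = [], []
    for start in range(len(btc) - window):
        end = start + window  # index of the day to predict
        X.append([[btc[t] / btc[start] - 1, eth[t] / eth[start] - 1]
                  for t in range(start, end)])
        y.append(target[end] / target[start] - 1)
    return X, y
```

Normalizing within each window keeps the inputs on a comparable scale even though BTC and ETH prices differ by orders of magnitude; predictions are mapped back to prices by multiplying by the window's first close.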

Home Advantage in Soccer Leagues

Motivated by David Sheehan's blog post on home (dis-)advantage in soccer games around the world, I tried to understand whether factors such as "psychological" effects can truly be extracted using ML. The underlying empirical observation is that the home team tends to score more goals than the away team. We define a "home field advantage score" that roughly captures by how much the home team outscores the away team: e.g., a 2-0 result counts as a stronger home advantage than 2-1. The results below are for UK and other European leagues.
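The exact definition of the score is not spelled out here, so the sketch below uses a hypothetical stand-in that is consistent with the 2-0 vs. 2-1 example: the mean home-minus-away goal difference per league. Match records are assumed to be `(league, home_goals, away_goals)` tuples.

```python
from collections import defaultdict
from statistics import mean

def home_advantage_scores(matches):
    """Hypothetical score: mean home-minus-away goal difference per league.

    A 2-0 home win contributes +2, a 2-1 win +1, and an away win counts negatively,
    so leagues where home teams win by wider margins get higher scores.
    """
    diffs = defaultdict(list)
    for league, home, away in matches:
        diffs[league].append(home - away)
    return {league: mean(d) for league, d in diffs.items()}
```

A plain goal-difference average is easy to compare across leagues; a richer score would also account for team strength, so that a strong side's home wins are not mistaken for home advantage.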